When building a web application, developers must decide whether to integrate caching during the initial build or wait until after launch. For teams that care about performance, scalability, and user experience from the outset, building caching in during development is usually a better choice than retrofitting it after launch. Caching is not merely an optimization afterthought; it is a part of application architecture that shapes data flow and access patterns, and it delivers the most benefit when it is designed in early.

Why Integrate Caching During Development?

Integrating caching early in the development lifecycle enables developers to build a high-performing, scalable application from the start. Here’s a technical breakdown of why caching should be part of the development process rather than a post-launch add-on.

1. Performance Testing in Realistic Conditions

When caching is integrated from the beginning, it allows the development team to conduct performance testing in conditions that are closer to a production environment. By caching commonly accessed data, you can measure how caching strategies affect loading times, database query loads, and overall server resource usage.

For instance, database caching reduces the load on your database servers by storing frequently queried data in a fast-access cache layer, such as Redis or Memcached. This minimizes database calls during heavy load conditions and enables developers to identify and address bottlenecks well before real users experience them.

2. Enhanced Scalability for High Traffic Loads

One of the biggest challenges web applications face is scaling to meet user demand. Caching supports horizontal scalability by absorbing repeated reads before they reach backend services, so the same backend capacity can serve far more concurrent users.

By implementing caching early, you gain the ability to simulate high-traffic scenarios and observe how well your caching solution performs under load. This is particularly beneficial when designing for redundancy and failover, because cache layers can be distributed across multiple nodes for high availability. Combined with load balancers, a distributed cache layer avoids a single point of failure and helps the application stay responsive as load varies.

3. Structural Impact on Codebase and Data Flow

Caching is not a standalone feature; it affects the way you design data flow and access patterns across your codebase. The absence of caching during development may lead to inefficient data retrieval methods, which could result in convoluted caching solutions later on.

Consider query caching or application-level caching, where the results of expensive computations or data fetches are stored temporarily. These caching mechanisms necessitate specific data structures and design patterns to function effectively. By planning for caching during development, you can optimize your code to cache data intelligently—improving data flow efficiency and reducing the overhead involved in refactoring code later.
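To make the point about data structures concrete, here is a small sketch of application-level caching using Python's standard `functools.lru_cache`. The function `shipping_quote` and its pricing numbers are made up for illustration. Note the design constraint it imposes: arguments must be hashable and the function should depend only on its arguments, which is much easier to arrange when the code is written with caching in mind.

```python
from functools import lru_cache


@lru_cache(maxsize=256)
def shipping_quote(region: str, weight_kg: int) -> float:
    """Hypothetical expensive computation; arguments must be hashable."""
    base = {"eu": 4.0, "us": 5.5}.get(region, 9.0)
    return base + 0.75 * weight_kg


shipping_quote("eu", 2)            # computed and stored
shipping_quote("eu", 2)            # served from the cache
info = shipping_quote.cache_info()
print(info.hits, info.misses)      # 1 1
```

A function that took a mutable dictionary or read global state would not fit this pattern without refactoring, which is the kind of rework early planning avoids.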

4. Improving User Experience (UX) from Day One

Performance is a critical component of user experience, and caching can significantly enhance page load times by reducing the need to repeatedly fetch data from backend servers. Slow applications drive users away, while fast, responsive applications improve user engagement and retention.

By implementing caching early, you can ensure that users experience a smooth, fast-loading application from launch. This is particularly important for content-heavy applications, such as e-commerce websites or social media platforms, where rebuilding content on every request can be resource-intensive. Full-page caching stores rendered pages in memory so that later requests for the same page skip rendering and database work entirely, which can cut load times sharply for every visitor who requests a cached page.

5. Avoiding Technical Debt with a Well-Structured Cache

Adding caching to a live application often leads to hurried, ad-hoc implementations, resulting in technical debt that can become a maintenance burden. Introducing caching as a well-planned part of the development process ensures that your caching strategy is cohesive, maintainable, and efficient.

A cache-first design approach lets you consider factors like cache invalidation, expiry policies, and data consistency from the outset. For instance, without a structured approach, you may encounter cache stampedes, where many concurrent requests miss on the same key and all try to repopulate it at once, overwhelming your backend servers. Patterns such as “cache-aside” and “read-through” do not prevent stampedes on their own; they need to be paired with safeguards such as per-key locking or request coalescing (so only one request rebuilds an entry), staggered expiry times, or refreshing hot entries before they expire.
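One common guard against the cache stampede described above is to let only one request per key reach the backend while the others wait for its result. The sketch below shows this with per-key locks and a double check. It is an in-process illustration only: `SingleFlightCache` and `slow_loader` are hypothetical names, and a distributed cache would need a distributed lock or a similar mechanism instead.

```python
import threading
import time
from typing import Callable, Dict


class SingleFlightCache:
    """Concurrent misses on one key trigger a single backend load."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader
        self._values: Dict[str, str] = {}
        self._key_locks: Dict[str, threading.Lock] = {}
        self._guard = threading.Lock()   # protects the lock table

    def _lock_for(self, key: str) -> threading.Lock:
        with self._guard:
            return self._key_locks.setdefault(key, threading.Lock())

    def get(self, key: str) -> str:
        if key in self._values:
            return self._values[key]
        with self._lock_for(key):
            # Re-check: another thread may have loaded the value while we waited.
            if key not in self._values:
                self._values[key] = self._loader(key)
            return self._values[key]


load_count = 0
count_lock = threading.Lock()


def slow_loader(key: str) -> str:
    """Hypothetical expensive backend call."""
    global load_count
    with count_lock:
        load_count += 1
    time.sleep(0.05)
    return key.upper()


cache = SingleFlightCache(slow_loader)
threads = [threading.Thread(target=cache.get, args=("home",)) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(load_count)  # 1
```

Twenty threads miss on the same key at the same time, yet the loader runs once; without the per-key lock it could run up to twenty times.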

6. Debugging and Testing Cache-Related Issues During Development

Caching introduces its own set of complexities, such as potential stale data, cache invalidation challenges, and race conditions. These issues are easier to resolve during development when the codebase is still flexible. Testing cache invalidation strategies—such as ensuring that updated data reflects correctly without manual cache clears—requires rigorous testing environments and debugging tools.

By testing these scenarios early, you can avoid costly fixes and user-facing issues post-launch. Integration with monitoring tools such as Prometheus (for collecting metrics) and Grafana (for dashboards) to track cache hits, misses, and latency also becomes simpler if caching is part of the initial architecture.

7. Speeding Up Development Cycles

An often overlooked benefit of caching in development is that it can improve the efficiency of the development process itself. By caching heavy database queries and resource-intensive computations, developers can benefit from faster feedback loops during testing.

For instance, local caching in development environments allows quick iterations without repeatedly querying the database, which speeds up the cycle of building, testing, and debugging features. This is especially useful for applications relying on complex data processing or machine learning models that are computationally expensive to load on each request.

Technical Best Practices for Integrating Caching

To ensure efficient caching during development, follow these technical best practices:

Configurable Caching Options: Make caching strategies configurable by environment. For example, enable aggressive caching in production, but let developers shorten TTLs or switch caching off entirely in development and staging environments.

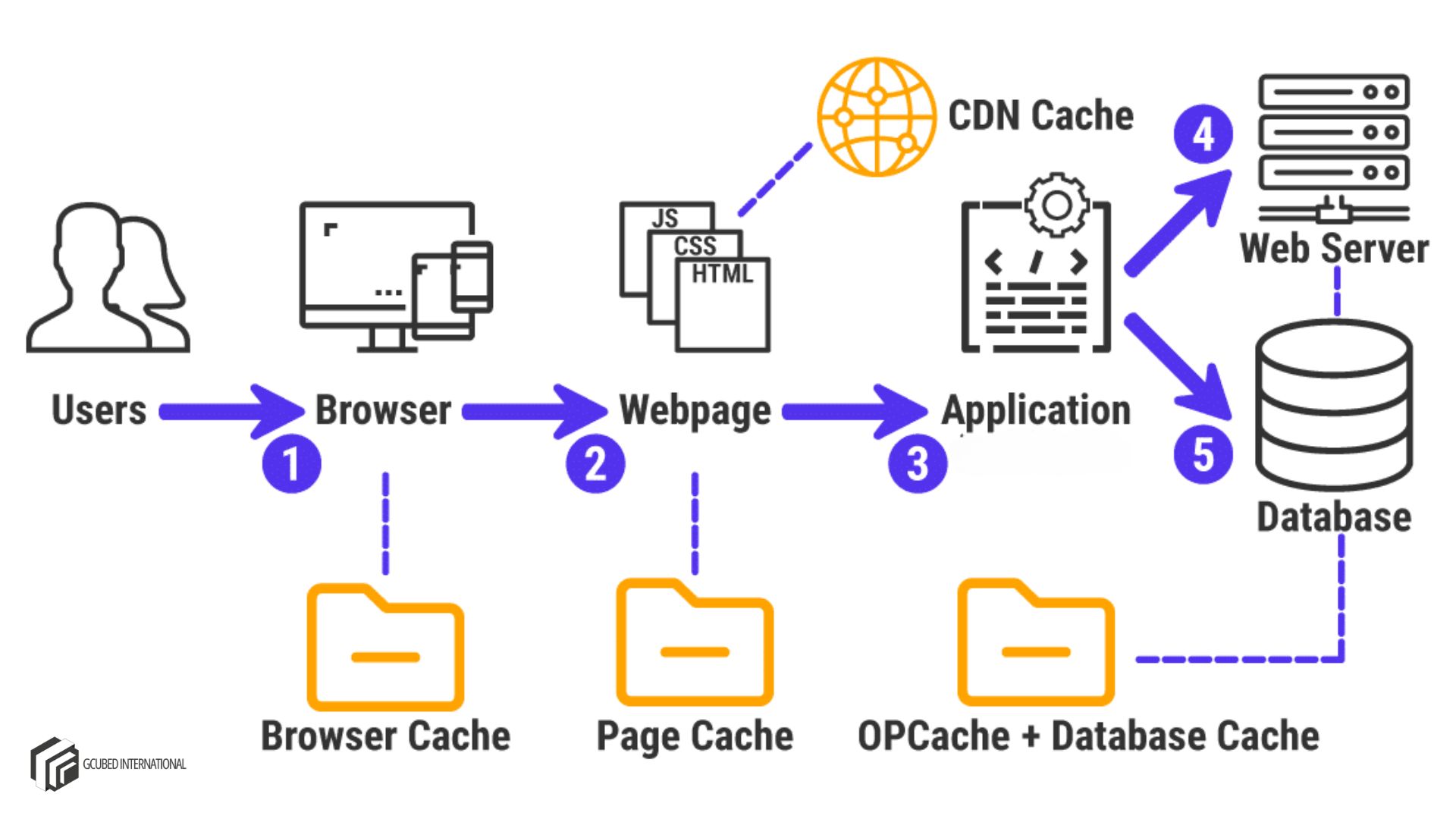
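A minimal sketch of environment-driven cache configuration might look like the following. Everything here is an assumption made for illustration: the variable names `APP_ENV` and `CACHE_ENABLED`, the `CacheSettings` class, and the TTL values are not taken from any particular framework.

```python
import os
from dataclasses import dataclass, replace
from typing import Mapping, Optional


@dataclass(frozen=True)
class CacheSettings:
    enabled: bool
    default_ttl_seconds: int


# Hypothetical per-environment defaults; tune the numbers for your application.
_DEFAULTS = {
    "development": CacheSettings(enabled=False, default_ttl_seconds=0),
    "staging": CacheSettings(enabled=True, default_ttl_seconds=30),
    "production": CacheSettings(enabled=True, default_ttl_seconds=300),
}


def load_cache_settings(env: Optional[Mapping[str, str]] = None) -> CacheSettings:
    """Pick settings by APP_ENV; CACHE_ENABLED, if set, overrides the toggle."""
    environ = os.environ if env is None else env
    name = environ.get("APP_ENV", "development")
    base = _DEFAULTS.get(name, _DEFAULTS["development"])
    override = environ.get("CACHE_ENABLED")
    if override is None:
        return base
    return replace(base, enabled=override.lower() in ("1", "true", "yes"))


settings = load_cache_settings({"APP_ENV": "production"})
print(settings.enabled, settings.default_ttl_seconds)  # True 300
```

Passing the environment in as a mapping keeps the function easy to test, while calling `load_cache_settings()` with no argument reads the real process environment.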

Leverage Different Caching Levels:

- Query Caching: Cache frequently run database queries at the database or ORM layer.

- Application-Level Caching: Cache application-specific data, such as user sessions or computed results, using in-memory stores like Redis or Memcached.

- Page Caching: For static or semi-static pages, use full-page caching solutions to reduce backend load.

Set Expiry and Invalidation Policies:

- Implement automatic cache expiry policies to prevent serving stale data. Use time-based expiry (TTL) for less frequently changing data and event-based invalidation for data that changes often.

- Consider cache warming techniques to pre-populate caches with high-demand data immediately after application restarts.
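The three policies above (time-based expiry, event-based invalidation, and cache warming) can be sketched together in one small class. This is an illustrative in-memory version, not a replacement for the expiry features of a real cache server; `TTLCache` and its method names are hypothetical, and the clock is injectable so expiry can be demonstrated without sleeping.

```python
import time
from typing import Callable, Dict, Iterable, Optional, Tuple


class TTLCache:
    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)

    def set(self, key: str, value: str) -> None:
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]   # time-based expiry
            return None
        return value

    def invalidate(self, key: str) -> None:
        """Event-based invalidation: call this when the underlying record changes."""
        self._entries.pop(key, None)

    def warm(self, keys: Iterable[str], loader: Callable[[str], str]) -> None:
        """Cache warming: pre-load known hot keys, for example right after a restart."""
        for key in keys:
            self.set(key, loader(key))


now = [0.0]  # fake clock for the demonstration
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.warm(["home", "pricing"], loader=lambda k: f"<page {k}>")
print(cache.get("home"))      # <page home>
cache.invalidate("home")      # e.g. the home page was edited
print(cache.get("home"))      # None
now[0] = 61.0                 # more than 60 seconds later
print(cache.get("pricing"))   # None
```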

Cache Consistency & Data Synchronization:

- Use write-through caching for critical data where consistency is paramount. Every write updates the database and the cache as part of the same operation, so reads served from the cache reflect the latest committed value.

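- For applications that can tolerate eventual consistency, implement cache-aside strategies, but handle the edge cases: invalidate or update the cached entry whenever the underlying data is written, and keep TTLs short enough that a stale entry cannot outlive a change for long.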
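As a sketch of the write-through approach mentioned above, the class below updates the backing store and the cache in a single `put` call. A dictionary stands in for the real database, and `WriteThroughCache` is a hypothetical name used only for this example.

```python
from typing import Dict, Optional


class WriteThroughCache:
    def __init__(self, database: Dict[str, str]) -> None:
        self._db = database               # a dict stands in for the real database
        self._cache: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._db[key] = value             # write the source of truth first
        self._cache[key] = value          # then update the cache in the same call

    def get(self, key: str) -> Optional[str]:
        if key in self._cache:
            return self._cache[key]
        value = self._db.get(key)         # fall back to the database on a miss
        if value is not None:
            self._cache[key] = value
        return value


db: Dict[str, str] = {}
store = WriteThroughCache(db)
store.put("sku-1", "in stock")
store.put("sku-1", "sold out")
print(store.get("sku-1"), "|", db["sku-1"])  # sold out | sold out
```

Writing to the database before the cache is a deliberate choice in this sketch: if the database write raises an error, the cache is left untouched and never holds a value the database does not have.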

Monitoring & Observability:

- Integrate cache monitoring solutions to track cache hit/miss ratios, latency, and cache evictions.

- Use tools like Grafana or Kibana to visualize cache metrics, which can help identify performance improvements and potential issues in real time.
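Before wiring up a dashboard, it helps to have the cache itself count what it does. The sketch below is a small LRU cache that records hits, misses, and evictions; `InstrumentedLRUCache` and `CacheStats` are hypothetical names, and exporting the counters to a monitoring system is deliberately left out.

```python
from collections import OrderedDict
from dataclasses import dataclass
from typing import Optional


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0
    evictions: int = 0

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


class InstrumentedLRUCache:
    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._data: "OrderedDict[str, str]" = OrderedDict()
        self.stats = CacheStats()

    def get(self, key: str) -> Optional[str]:
        if key in self._data:
            self._data.move_to_end(key)      # mark as most recently used
            self.stats.hits += 1
            return self._data[key]
        self.stats.misses += 1
        return None

    def set(self, key: str, value: str) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self._capacity:
            self._data.popitem(last=False)   # evict the least recently used entry
            self.stats.evictions += 1


cache = InstrumentedLRUCache(capacity=2)
cache.set("a", "1")
cache.set("b", "2")
cache.get("a")        # hit; "a" becomes most recently used
cache.set("c", "3")   # over capacity: evicts "b"
cache.get("b")        # miss
print(cache.stats, cache.stats.hit_ratio)
# CacheStats(hits=1, misses=1, evictions=1) 0.5
```

A falling hit ratio or a rising eviction count is often the first sign that a cache is too small or that keys are poorly chosen.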

In modern web applications, caching is not simply an optimization to be tacked on post-launch. By integrating caching early in the development process, developers can design a scalable, high-performance application architecture that provides a fast user experience from day one. Pre-launch caching integration allows you to measure performance under realistic conditions, optimize data flow, structure efficient code, and avoid technical debt.

From caching database queries and application data to implementing page caching and distributed caching solutions, a thoughtfully integrated caching strategy can help your application handle high traffic loads, reduce server strain, and deliver faster response times. By following caching best practices during development, you’ll create a robust application that not only performs well at launch but scales effortlessly as demand grows.
